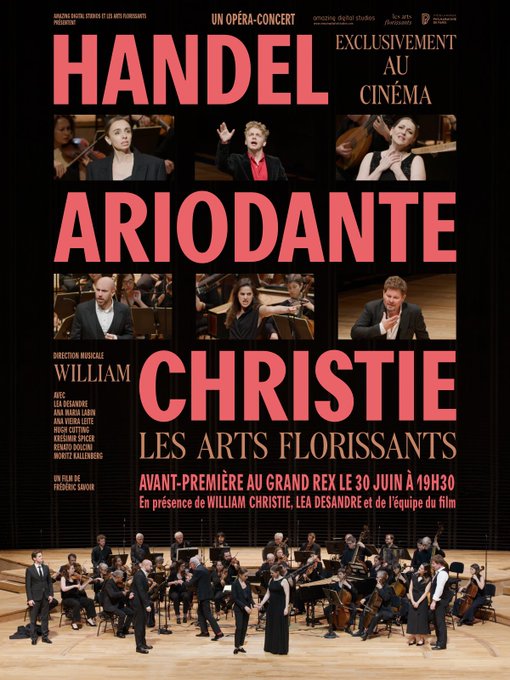

#Ariodante by #Händel, performed by Les Arts Florissants as an opera-concert-film, is #amazing; three reasons, two of them frivolous.

- it's 16 euros, one night only in Paris, at the Grand Rex cinema

- seeing William Christie, beaming, sketch a dance step with Lea Desandre

- seriously now: what Frédéric Savoir's film #Ariodante brings to a very fine opera.

3a) A film is written three times: in the screenplay, during the shoot, and in the edit. The screenplay is the libretto, the magic of Les Arts Florissants AND all the preparatory work during the ensemble's rehearsals. That work anticipated the filming setup needed for D-day at the Philharmonie de Paris, so that the singers and musicians could first get used to the camera and then forget it, and so that all the beauty of the performance would be captured, since there would be only one shoot...

details one rarely sees from far away in the audience, even with opera glasses, because we only have one pair of eyes. Whether we are watching the naive Dalinda or straining to hear a violinist, we miss the resting flautist silently mouthing Lurcanio's words, the cellist whose throat tightens as madness overtakes Ginevra (Ana Maria Labin), or the delightfully swaggering, cocky-young-man posture of Lea Desandre's #Ariodante. In the edit, Frédéric does justice to all the life pulsing through the veins of a superb concert ensemble.

, science
