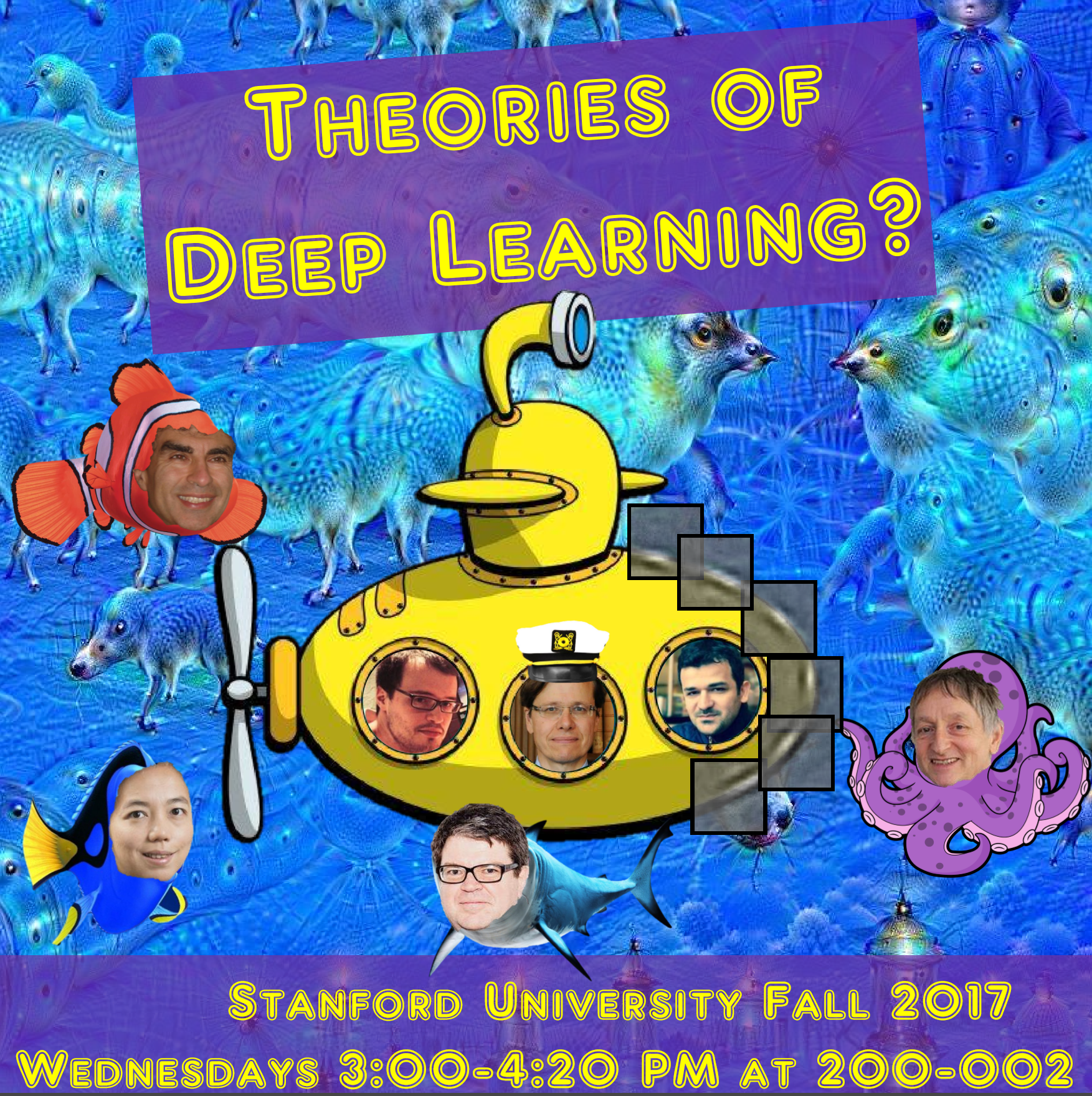

With a touch of provocation in its poster, Stanford University's STATS 385 (Fall 2017) offers a series of talks on the Theories of Deep Learning, with videos, lecture slides, and a cheat sheet (stuff that everyone needs to know). Outside the yellow submarine, Nemo-like sea creatures depict Fei-Fei Li, Yoshua Bengio, Geoffrey Hinton, and Yann LeCun on a Deep Dream background. So, wrapping up stuff about CNNs (convolutional neural networks):

The spectacular recent successes of deep learning are purely empirical. Nevertheless intellectuals always try to explain important developments theoretically. In this literature course we will review recent work of Bruna and Mallat, Mhaskar and Poggio, Papyan and Elad, Bolcskei and co-authors, Baraniuk and co-authors, and others, seeking to build theoretical frameworks deriving deep networks as consequences. After initial background lectures, we will have some of the authors presenting lectures on specific papers. This course meets once weekly. Videos and slides are gathered as follows.

- Theories of Deep Learning, Lecture 01: Deep Learning Challenge. Is There Theory? (Donoho/Monajemi/Papyan) : video, slides

- Theories of Deep Learning, Lecture 02: Overview of Deep Learning From a Practical Point of View (Donoho/Monajemi/Papyan) : video, slides

- Theories of Deep Learning, Lecture 03: Harmonic Analysis of Deep Convolutional Neural Networks (Helmut Bolcskei) : video, slides

- Theories of Deep Learning, Lecture 04: Convnets from First Principles: Generative Models, Dynamic Programming & EM (Ankit Patel) : video, slides

- Theories of Deep Learning, Lecture 05: When Can Deep Networks Avoid the Curse of Dimensionality and Other Theoretical Puzzles (Tomaso Poggio) : video, slides

- Theories of Deep Learning, Lecture 06: Views of Deep Networks from Reproducing Kernel Hilbert Spaces (Zaid Harchaoui) : video, slides

- Theories of Deep Learning, Lecture 07: Understanding and Improving Deep Learning With Random Matrix Theory (Jeffrey Pennington) : video, slides

- Theories of Deep Learning, Lecture 08: Topology and Geometry of Half-Rectified Network Optimization (Joan Bruna) : video, slides

- Theories of Deep Learning, Lecture 09: What’s Missing from Deep Learning? (Bruno Olshausen) : video, slides

- Theories of Deep Learning, Lecture 10: Convolutional Neural Networks in View of Sparse Coding (Vardan Papyan and David Donoho) : video, slides